INTRO:

Below is my guide to becoming a data engineer, based on current job market demands (08/08/2020). I have outlined the TOP 5 foundational skills needed to be successful. For each one I list resources that I have reviewed and consider good enough to learn from, along with a realistic time allocation for absorbing the material and its estimated cost. Most of the courses I provide are on Udemy, but feel free to use any other website. I have another blog about using YouTube.

Please note that taking time to practice is crucial when self-learning, so budget 3 times the duration of the course videos.
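
As a rough sketch of that budgeting rule, the arithmetic can be written out as below. The function name `learning_weeks` and its parameters are made up for illustration, and the 10 hours a week is only an example study pace.

```python
import math


def learning_weeks(course_hours, hours_per_week, practice_factor=3):
    """Estimate study weeks: video hours times a practice multiplier,
    divided by the hours available per week."""
    total_hours = course_hours * practice_factor
    return total_hours / hours_per_week


# A 9-hour course at 10 hours of study per week: 27 hours in total.
sql_bootcamp_weeks = learning_weeks(9, 10)
print(f"{sql_bootcamp_weeks:.1f} weeks, so plan for {math.ceil(sql_bootcamp_weeks)}")

# A 5-hour course at the same pace: 15 hours in total.
print(f"{learning_weeks(5, 10):.1f} weeks")
```

Rounding up to whole weeks leaves a little slack for topics that take longer than expected.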

1. Good Foundational Knowledge of SQL Programming (SQL Query writing)

The Complete SQL Bootcamp 2020: Go from Zero to Hero (on Udemy)

This is a good first step to get you from beginner to intermediate in SQL.

Cost: 11-15 dollars

Course time: 9 hrs

Learning Time: 3 weeks (Spending 10 hrs a week)

SQL - Beyond The Basics

This course focuses on advanced concepts that are crucial in getting through most interviews these days.

Cost: 11-15 dollars

Course time: 5 hrs

Learning Time: 1.5 weeks
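
The course syllabus is not listed here, so the following is only an assumption about what "advanced" covers: window functions are one topic commonly asked about in SQL interviews. A minimal sketch using Python's built-in `sqlite3` module (window functions need SQLite 3.25 or newer) with a made-up `orders` table:

```python
import sqlite3

# In-memory database with a small, invented orders table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer TEXT, order_date TEXT, amount REAL);
    INSERT INTO orders VALUES
        ('alice', '2020-01-05', 50.0),
        ('alice', '2020-02-10', 70.0),
        ('bob',   '2020-01-20', 20.0),
        ('bob',   '2020-03-01', 90.0);
""")


def latest_order_per_customer(connection):
    """Return each customer's most recent order using ROW_NUMBER()."""
    query = """
        SELECT customer, order_date, amount
        FROM (
            SELECT customer, order_date, amount,
                   ROW_NUMBER() OVER (
                       PARTITION BY customer
                       ORDER BY order_date DESC
                   ) AS rn
            FROM orders
        )
        WHERE rn = 1
        ORDER BY customer
    """
    return connection.execute(query).fetchall()


for row in latest_order_per_customer(conn):
    print(row)
```

The same "latest row per group" pattern carries over to other SQL engines that support window functions.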

2. Knowledge of Python Programming (only applies if you do not have any programming background)

a. Beginner to Intermediate Python Course:

Complete Python Bootcamp: From Zero to Hero in Python:

This will give you a good grasp on some fundamentals of coding in Python and object-oriented programming.

https://www.udemy.com/course/complete-python-bootcamp/

Cost: 15-20 dollars

Course Time: 24 hrs

Learning Time: 1 month

b. Python for Data Analysis: NumPy and pandas DataFrames

These free YouTube videos are very comprehensive. They cover the most popular Python libraries used in the real world for data analysis, namely pandas and NumPy. Feel free to skip the 4 hr course and jump straight to the pandas videos if you are short on time.

Numpy + Pandas 4 hr course

https://youtu.be/r-uOLxNrNk8

Pandas 1 hr course

https://youtu.be/vmEHCJofslg

Pandas Advanced concepts 1 hr course

https://youtu.be/P_t8LO-KgWM

c. Python Algorithms and Data Structures (for Mid to Senior Data Engineers)

Python for Data Structures algorithms and interviews

This course is crucial for understanding the fundamentals of software engineering. Please note you have to be at an intermediate level before taking this course. This is essential to get through most coding interviews for mid or senior roles.

https://www.udemy.com/course/python-for-data-structures-algorithms-and-interviews/

Cost: 12-15 dollars

Course Time: 17 hrs

Learning Time: 1 month

3. Good understanding of ETL Computing Engine for Big Data- Spark/Databricks

a. Create a Databricks community edition account so you can have a platform to practice

b. Understand Spark architecture and the overall capabilities of Spark (course taught in Scala):

I have not watched this video course, but it promises to go over the architecture of Spark in depth using Scala (the primary language of Spark). Don't worry about Scala: Spark also supports SQL and Python, so you don't need to be proficient in it.

Spark Essentials

Cost: 11-15 dollars

Course Time: 7.5 hrs

Course Learning Time: 3 weeks

c. Optional: PySpark Tutorial. Knowledge of SQL and Python makes learning PySpark much easier

PySpark for Spark

If your SQL is really strong, Spark SQL will be sufficient for most Data Warehousing use cases in Spark. The things you need PySpark for are Spark Streaming use cases and machine learning. These can be learned on the job by searching Google, or you can take this course:

https://www.udemy.com/course/spark-and-python-for-big-data-with-pyspark/

Cost: 11-15 dollars

Course Time: 11 hrs

Course Learning Time: 3 weeks

d. Databricks /Spark Optimization: this is important because a lot of interviews ask about this

Note that if you have good knowledge of SQL and Python you can work a lot with Spark

Video time: 1 hr

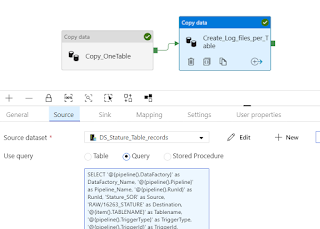

e. Learn a simple ETL tool in Azure: Azure Data Factory

Comprehensive overview playlist

https://www.youtube.com/watch?v=Mc9JAra8WZU&list=PLMWaZteqtEaLTJffbbBzVOv9C0otal1FO

Advanced Data Factory concepts (Parameterization)

https://youtu.be/K5Ak4IdtBCo

4. Cloud Knowledge (AWS or Azure); Get Certified if possible

This will help your resume if you don't have experience. This knowledge is a bonus, but it is also crucial for hitting the ground running on various projects. Most jobs right now involve migrating data analytics solutions from on-premises to the cloud, so a good grasp of cloud architecture is important.

I would start with

Azure AZ-900: Fundamentals of Azure

https://docs.microsoft.com/en-us/learn/paths/azure-fundamentals/

and Azure Data Solution Services

https://www.youtube.com/watch?v=ohya6zTa1Hg

Optional: Azure Data Engineer

https://docs.microsoft.com/en-us/learn/certifications/azure-data-engineer

AWS Solution Architect: Fundamentals of AWS

https://www.youtube.com/watch?v=k1RI5locZE4

and AWS Data Engineer

https://www.youtube.com/watch?v=8EcQ7x-9pHA

Pick one

Total Course Hours: 6-10 hrs

My estimated Learning Time: 3 weeks

PS: I don't have much knowledge of AWS. I only know Azure

5. Basics of Data Modelling: For Data Engineers who need to work more with Business Intelligence use cases

Data warehousing and data modelling knowledge helps data engineers deliver efficient analytics solutions. Normally you gain this knowledge with experience. However, if you really want to differentiate yourself on the job, it is good to learn it up front.

Data Modelling Fundamentals

https://www.udemy.com/course/mastering-data-modeling-fundamentals/

Cost: 13 dollars

Course Time: 3 hrs

Data Warehousing Fundamentals

https://www.youtube.com/watch?v=J326LIUrZM8

https://youtu.be/lWPiSZf7-uQ

Total Hours: 8 hrs

My estimated Learning Time: 2 weeks
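
To give a feel for what data modelling for Business Intelligence looks like, here is a minimal sketch of a star schema: one fact table joined to two dimension tables. The courses above may use different examples; every table name, column name, and value below is invented for illustration, and SQLite is used only because it ships with Python.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables describe the "who/what/when" of each sale.
    CREATE TABLE dim_product (
        product_id INTEGER PRIMARY KEY, name TEXT, category TEXT
    );
    CREATE TABLE dim_date (
        date_id INTEGER PRIMARY KEY, full_date TEXT, month TEXT
    );
    -- The fact table holds the measures plus keys into the dimensions.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        date_id    INTEGER REFERENCES dim_date(date_id),
        quantity   INTEGER,
        revenue    REAL
    );
    INSERT INTO dim_product VALUES
        (1, 'Keyboard', 'Hardware'),
        (2, 'Mouse', 'Hardware'),
        (3, 'Editor license', 'Software');
    INSERT INTO dim_date VALUES
        (1, '2020-07-15', '2020-07'),
        (2, '2020-08-01', '2020-08');
    INSERT INTO fact_sales VALUES
        (1, 1, 2, 100.0),
        (2, 1, 1, 20.0),
        (3, 2, 5, 250.0),
        (1, 2, 1, 50.0);
""")

# A typical BI question: revenue per product category per month.
revenue_by_category = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_product p ON p.product_id = f.product_id
    JOIN dim_date d ON d.date_id = f.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()

for row in revenue_by_category:
    print(row)
```

Keeping descriptive attributes in the dimensions and only keys and measures in the fact table is what lets reporting queries stay this short.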

6. Relational Database Concepts and Fundamentals

Database Lessons

This YouTube playlist goes over the core concepts of a relational database, such as ACID properties and indexes. It is important for engineers working with data stored in relational databases.

https://www.youtube.com/playlist?list=PL1LIXLIF50uXWJ9alDSXClzNCMynac38g
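
As a small taste of two of those concepts, the sketch below shows atomicity (the "A" in ACID) and an index lookup. It is not taken from the playlist; the `accounts` table, the `transfer` helper, and the index name are hypothetical, and SQLite stands in for whichever relational database you end up using.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, owner TEXT, "
    "balance REAL CHECK (balance >= 0))"
)
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?, ?)",
    [(1, "alice", 100.0), (2, "bob", 50.0)],
)
conn.commit()


def transfer(connection, src, dst, amount):
    """Move money between accounts; both updates apply or neither does."""
    try:
        with connection:  # commits on success, rolls back on an exception
            connection.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            connection.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(connection):
    """Return a mapping of account id to balance."""
    return dict(connection.execute("SELECT id, balance FROM accounts"))


# Overdrawing violates the CHECK constraint, so the credit is rolled back too.
failed = transfer(conn, 1, 2, 500.0)
after_failed = balances(conn)

# A valid transfer commits both updates together.
ok = transfer(conn, 1, 2, 30.0)
after_ok = balances(conn)
print(failed, after_failed, ok, after_ok)

# An index lets the database look rows up by owner without a full scan.
conn.execute("CREATE INDEX idx_accounts_owner ON accounts(owner)")
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT balance FROM accounts WHERE owner = ?",
    ("alice",),
).fetchall()
print(plan)  # the plan text should mention idx_accounts_owner
```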

Senior/Advanced level Data Engineers need the skills below as well (note that I am not there yet).

7. Hadoop Architecture and Ecosystem

Hadoop Developer In Real World

This is a really good course that covers the essentials for working as a data engineer in the big data space. It covers the parts of the Hadoop ecosystem that are most used in the real world, for example big data file formats, Hive, and Spark.

https://www.udemy.com/course/hadoopinrealworld/

8. Build real-time Analytics Pipeline with Kafka/Event Hubs and Spark Streaming

https://www.udemy.com/course/kafka-streams-real-time-stream-processing-master-class/

9. Massively Parallel Processing Databases (Snowflake, Azure DW, Redshift, Netezza, Teradata)

https://youtu.be/NUGcAUyQY-k

10. Working with NoSQL databases (MongoDB, CosmosDB)

https://www.udemy.com/course/learn-mongodb-leading-nosql-database-from-scratch/

11. Working with Graph Databases

https://www.udemy.com/course/neo4j-foundations/

12. Python for Data Science and Machine Learning

This course is important for Data Engineering roles that also involve working as a Data Scientist. It goes over machine learning models and techniques.

https://www.udemy.com/course/python-for-data-science-and-machine-learning-bootcamp/

Cost: 12-15 dollars

Course Time: 24 hrs

Learning Time: 3 weeks (skip the parts that are not relevant)

Summary

Getting into a Data Engineering career is not easy, but I believe hard work and dedication can get you there. If you dedicate 3 months of absolute focus to learning, at 4 to 5 hrs a day or 30 hrs a week, you can master most of these fundamental skills and start getting entry-level jobs.

I would spend 1 month on SQL, 1 month on Python, and the last month on Spark, Cloud, and Data Modelling.

Please reach out to me on LinkedIn with any questions, and follow me as well.

There is a lot more to learn. I have another blog that comprehensively lists the various tools and technologies a Data Engineer could pick up: http://plsqlandssisheadaches.blogspot.com/2020/01/how-i-transitioned-to-data-engineer-as.html

#dataengineer #datascience #bigdataengineer #dataengineering